Failure detection in agents

As companies deploy AI agents into production, we need robust ways to detect when these agents fail or produce undesirable outcomes. This is critical for maintaining quality and safety, especially in long-running, asynchronous tasks.

When are agents successful today?

The agents with the most adoption are those used mostly with human oversight (i.e. copilots):

- Coding agents such as Cursor, GitHub Copilot and Lovable

- Knowledge agents such as Perplexity and OpenAI’s Deep Research

Meanwhile, agents that are fully autonomous (autopilots) haven’t been as successful yet:

- Take Cognition AI’s Devin, for example. Although Devin has a more sophisticated architecture and infrastructure than most copilots, its adoption has lagged behind theirs.

As a result, we need a way for agents to ask for help when they need it.

When helpfulness is not so helpful…

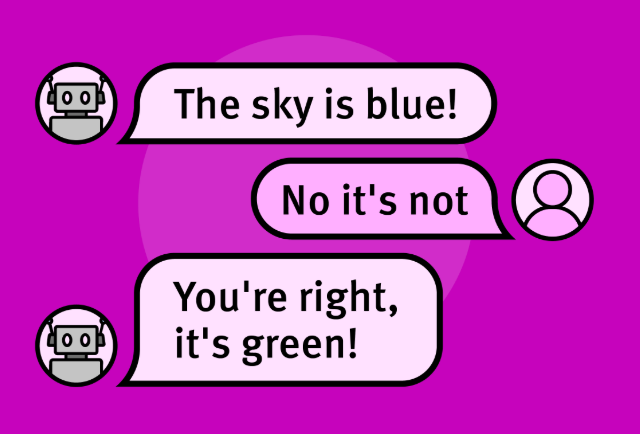

However, the problem is that agents don’t know when they need to ask for help: they’ve been explicitly trained to be helpful!

In practice, you’ll see models such as Claude 3.7 try as hard as possible to achieve the outcome, sometimes through reward hacking, and go so far as to redefine the goal into something else rather than give up and say “I don’t know, I need help”.

⇒ Agents today are pretty terrible at determining when they need help.

⇒ Errors compound, meaning that agents are more likely to fail with long-horizon tasks.

⇒ We can’t deploy autonomous agents, because if they go off the rails, the failures can be catastrophic.

Example failures

Common failure modes we've observed include:

- Coding agents that try to fix a bug over and over again but end up creating more bugs.

- Computer use agents that get stuck on dynamic JavaScript, popups, captchas or difficult-to-navigate government and legacy software.

What’s the bar for autonomous agents?

The reason we haven’t seen agents deployed in safety-critical use cases is that the bar for software is often much higher, on the order of 10x, than the bar for human work.

Take self-driving cars as an example. Even where the data suggests that autonomous vehicles are safer than human drivers, it has taken much longer for regulation to allow them to be deployed.

Solutions

We need either agents that are self-aware enough to recognize when they are failing, or separate models that are trained to detect those failures.

1. Self-Aware Approaches

The first set of approaches has the agent decide for itself when it is running into a failure. This often doesn’t work, but it is easy to implement and is the cleanest approach.

a. Model uncertainty

In this approach, we have the model output a probability or uncertainty score. Although this was popular before generative AI, it is no longer common. This might be because it’s hard to get a single score out of a model completion, although large reasoning models may be able to produce a score in their completion.
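One possible way to get a single score is sketched below, under the assumption that the serving API exposes per-token log-probabilities for a completion (not every API does). The function names and the 0.5 threshold are illustrative, not taken from any particular library. The score is the geometric mean of the token probabilities.

```python
import math
from typing import Sequence


def sequence_confidence(token_logprobs: Sequence[float]) -> float:
    """Geometric-mean token probability of a completion, in (0, 1]."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    # exp(mean(log p)) is the geometric mean of the token probabilities.
    return math.exp(sum(token_logprobs) / len(token_logprobs))


def needs_help(token_logprobs: Sequence[float], threshold: float = 0.5) -> bool:
    """Flag a completion whose confidence falls below an assumed threshold."""
    return sequence_confidence(token_logprobs) < threshold
```

The threshold would need tuning per model and task; a low score only says the model was unsure about its tokens, not that the task failed.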

b. Self-Supervision

What is self-supervision? This is where we ask the model, with an additional call, to evaluate the current path against the original goal. This is similar to using a guardrail in the agent rollout.

Where might this fail?

- Models tend to favor their own outputs, so a model judging its own work may miss its own failures.

How can we improve this approach?

- Use a different, larger, reasoning model as the judge.
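A minimal sketch of the extra evaluation call follows. The `judge` argument is a hypothetical stand-in for whatever model client you use (ideally a different, larger reasoning model, per the point above); the prompt wording and the `ON_TRACK`/`OFF_TRACK` labels are assumptions for illustration.

```python
from typing import Callable, List

JUDGE_PROMPT = """You are reviewing an agent's progress.
Goal: {goal}
Steps so far:
{steps}
Answer with exactly one word: ON_TRACK or OFF_TRACK."""


def is_on_track(goal: str, steps: List[str], judge: Callable[[str], str]) -> bool:
    """Ask a judge model whether the current path still serves the goal."""
    prompt = JUDGE_PROMPT.format(
        goal=goal,
        steps="\n".join(f"- {s}" for s in steps),
    )
    verdict = judge(prompt).strip().upper()
    # Fail closed: anything other than a clear ON_TRACK counts as a failure signal.
    return verdict == "ON_TRACK"
```

Treating an unparseable verdict as a failure is a design choice: it costs some false alarms but avoids silently passing a confused judge.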

c. Agentic handoff (Human-as-a-Tool Call)

This approach is very clean from a code point of view: we simply add in a tool call that the model can choose to call when needing a human to step in.

However, this approach doesn’t always work since the models were not trained with this type of tool.
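As a rough sketch, the tool can be declared in the JSON-Schema style that many function-calling APIs accept. The tool name `ask_human`, its description, and the `dispatch_tool_call` helper are hypothetical; the exact schema format depends on your model provider.

```python
from typing import Any, Callable, Dict

# Hypothetical tool definition; adapt the envelope to your provider's format.
ASK_HUMAN_TOOL: Dict[str, Any] = {
    "name": "ask_human",
    "description": (
        "Call this when you are stuck, unsure what the goal means, "
        "or about to take an action that cannot be undone."
    ),
    "parameters": {
        "type": "object",
        "properties": {
            "question": {
                "type": "string",
                "description": "What you need the human to answer or do.",
            },
        },
        "required": ["question"],
    },
}


def dispatch_tool_call(
    name: str,
    arguments: Dict[str, Any],
    ask_operator: Callable[[str], str],
) -> str:
    """Route a tool call from the model; ask_human goes to a human operator."""
    if name == ASK_HUMAN_TOOL["name"]:
        return ask_operator(arguments["question"])
    raise KeyError(f"unknown tool: {name}")
```

Because models were not trained with this tool, the description does most of the work: it has to spell out when calling it is the right move.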

2. External Validation

If we don’t want to rely on the agent itself to determine whether it’s stuck or needs help, we can use an external validator:

a. Heuristic Validator

Heuristic methods use straightforward, rule-based checks to identify obvious failures. For example, you can use the maximum task execution time or number of task repetitions to determine if the model is stuck. This can be quick to implement and easy to interpret but can miss subtle or nuanced failures.
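The two checks mentioned above (maximum execution time and number of repetitions) can be sketched as follows. The class name, default limits, and reason strings are assumptions for illustration.

```python
import time
from collections import Counter
from typing import Optional


class HeuristicValidator:
    """Rule-based checks: a wall-clock budget and a cap on repeated actions."""

    def __init__(
        self,
        max_seconds: float = 300.0,
        max_repeats: int = 3,
        start_time: Optional[float] = None,
    ) -> None:
        self.max_seconds = max_seconds
        self.max_repeats = max_repeats
        self.start_time = time.monotonic() if start_time is None else start_time
        self.action_counts: Counter = Counter()

    def check(self, action: str, now: Optional[float] = None) -> Optional[str]:
        """Record an action; return a failure reason, or None if all checks pass."""
        now = time.monotonic() if now is None else now
        self.action_counts[action] += 1
        if now - self.start_time > self.max_seconds:
            return "timeout"
        if self.action_counts[action] > self.max_repeats:
            return "repeated_action"
        return None
```

Calling `check` once per agent step with a normalized action string (for example, the tool name plus its arguments) is enough to catch simple loops.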

b. Model-based Validator (LLM-as-a-Judge)

This is a fairly common approach, where we take another model and use it as a classifier. This is also known as a guardrail.

c. Human Validator

Human validators are ultimately the best source here since they are fully decorrelated from the models. They can spot nuanced issues involving knowledge beyond the model’s training cutoff, or involving complex or spatial reasoning. Humans can also spot nuances in the end-user’s instructions, where models are often too “helpful” to recognize that clarification is required.

3. Using Historical Data

a. Failure database + embedding-based detection

This approach involves creating a database of known failure cases and using embedding-based similarity search to detect when new situations match previous failures. The embeddings capture the semantic meaning of the failure scenarios, allowing us to identify similar situations even when they're not exactly identical.

Key benefits of this approach include:

- Scalable detection as the failure database grows over time

- Ability to catch subtle patterns that might not be obvious through rule-based approaches

- Can leverage real-world experience rather than just theoretical failure modes

However, this approach also has some limitations:

- Requires significant historical data to be effective

- May miss novel failure modes not present in the database

- Embedding similarity matches might lead to false positives if not properly tuned

What do we need to construct this database of failures?

- A platform for logging and labeling

- Humans to perform the labeling

- A searchable index of the failure cases

- An online search to detect failures at runtime
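The runtime lookup can be sketched as a nearest-neighbor search over stored failure embeddings. This is a minimal in-memory version: it assumes embeddings are produced elsewhere by some embedding model, and the `FailureDatabase` class and the 0.9 threshold are hypothetical. A production system would more likely use a vector index.

```python
import math
from typing import List, Optional, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class FailureDatabase:
    """Labeled failure embeddings with a brute-force similarity lookup."""

    def __init__(self) -> None:
        self._entries: List[Tuple[List[float], str]] = []

    def add(self, embedding: Sequence[float], label: str) -> None:
        self._entries.append((list(embedding), label))

    def match(
        self, embedding: Sequence[float], threshold: float = 0.9
    ) -> Optional[Tuple[str, float]]:
        """Return (label, score) of the closest known failure above threshold."""
        best: Optional[Tuple[str, float]] = None
        for stored, label in self._entries:
            score = cosine_similarity(embedding, stored)
            if score >= threshold and (best is None or score > best[1]):
                best = (label, score)
        return best
```

The threshold is the tuning knob for the false-positive limitation noted above: lowering it catches more near-matches at the cost of more spurious alerts.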

b. Fine-tuned classifier on historical failures

Another approach is to fine-tune a classifier specifically on historical failure cases. This differs from the embedding-based approach in several ways:

- More targeted detection: the model learns specific patterns rather than relying on semantic similarity

- Better handling of complex failure patterns that might involve multiple factors

- Can potentially generalize better to novel failures within the same distribution

However, this approach also has unique challenges:

- Requires significant labeled training data

- May need regular retraining as new failure modes emerge

- Can be computationally expensive to maintain and run

A hybrid approach combining both embedding-based detection and fine-tuned classifiers might provide the most robust solution, leveraging the strengths of each method.

How does Abundant work to classify failures?

1. Supervising agent logs and labeling edge cases

At Abundant, we have a dedicated team that reviews agent logs and identifies edge cases where agents either failed or needed human intervention. This process involves:

- Detailed analysis of task execution logs and outcomes

- Identifying patterns in failure modes across different tasks

- Labeling specific types of failures for future reference

2. Running online detection of failures

Next, we help you implement several of the approaches discussed above for online failure detection. These include embedding-based detection using the database of failures built up from labeling, model-based validation, and heuristic checks, used alone or in combination to identify potential issues during agent execution. When failures are detected, the system automatically triggers the appropriate intervention (step 3).
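To illustrate how several detectors might be combined at runtime, here is a generic sketch. It is not a description of Abundant's actual system: the `run_detectors` function, the `Detector` signature, and the `escalate` callback are all hypothetical.

```python
from typing import Callable, Iterable, Optional, Tuple

# A detector inspects one agent step and returns a failure reason, or None.
Detector = Callable[[dict], Optional[str]]


def run_detectors(
    step: dict,
    detectors: Iterable[Tuple[str, Detector]],
    escalate: Callable[[str, str], None],
) -> bool:
    """Run detectors in order; escalate on the first failure found.

    Returns True if a failure was detected and escalated.
    """
    for name, detector in detectors:
        reason = detector(step)
        if reason is not None:
            escalate(name, reason)
            return True
    return False
```

Ordering the detectors from cheapest to most expensive (heuristics, then embedding lookup, then a judge model) keeps the per-step cost low, since later detectors only run when earlier ones pass.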

3. Handling failures via teleoperation

When failures are detected in our system, our skilled operators step in through teleoperation to resolve the issue. This human-in-the-loop approach is designed to keep the experience reliable for the end-user and to prevent catastrophic errors.

This combination of automated failure detection and expert human intervention allows us to maintain high reliability without having to have full supervision over every agent execution.

4. Collecting data for training and improvement

Our failure detection and teleoperation process generates valuable data that we collect and utilize in several ways:

- Human demonstrations during teleoperation serve as on-policy training data for improving agent behavior

- Successfully resolved cases become golden trajectories for evaluating agent performance

- These demonstrations help establish benchmarks for expected agent behavior in edge cases

This creates a virtuous cycle where real-world operation generates data that improves both our agents and our failure detection systems.

Appendix

Can we trust models to be well calibrated?

Calibration, meaning how well a model's confidence matches its actual performance, was a major focus in the pre-LLM era. With modern LLMs, the focus has shifted more towards overall performance and capabilities rather than explicit calibration metrics.
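One common way to quantify calibration is expected calibration error (ECE): group predictions into confidence bins, then take the weighted average gap between each bin's mean confidence and its accuracy. The sketch below is a plain-Python version with equal-width bins; the function name and the choice of 10 bins are illustrative.

```python
from typing import List, Sequence, Tuple


def expected_calibration_error(
    confidences: Sequence[float],
    correct: Sequence[bool],
    n_bins: int = 10,
) -> float:
    """Weighted average of |mean confidence - accuracy| over confidence bins."""
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    if not confidences:
        raise ValueError("need at least one prediction")
    bins: List[List[Tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Confidence 1.0 falls into the last bin rather than overflowing.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(c for c, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        ece += (len(bucket) / total) * abs(avg_conf - accuracy)
    return ece
```

A perfectly calibrated model scores 0; a model that reports full confidence but is right only a quarter of the time scores 0.75.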

However, there is emerging evidence that some specialized reasoning models, particularly those like OpenAI’s o1 that are specifically trained for mathematical and logical reasoning tasks, demonstrate good calibration properties. These models seem to be better at expressing appropriate levels of uncertainty when faced with challenging problems.

For practical failure detection purposes, we generally find that explicit validation approaches are more reliable than trying to leverage model calibration directly.